Droplet in action

Before diving into the narrative, here's a video of Droplet doing its thing. Getting here was a long road and this article tells the story of how we did it. It'll talk about our engineering process, how we tested components and designed sub-experiments, and the mistakes we made along the way.

Some logistics

This article is a companion to an RSS2021 paper that is freely available here. Where an academic article focuses only on things of academic interest - expansions of what is possible - this article does a deep dive into the engineering process of actually implementing the first step towards autonomous underwater construction. If you use anything from this article please cite the academic paper. Anyone interested in replicating this system in whole or in part (like the redone main electronics tube) is invited to send me an email and I'll help you get your system running. CAD files and software are available on GitHub. Now, enjoy!

The big idea

The broad goal of this project was to establish a solid first step towards more practical autonomous underwater construction systems. The general idea is that the robot will be fitted with a gripper designed to manipulate blocks (or bricks). It will unload the blocks from a pallet and stack them according to a known construction plan -- like a 3D printer or CNC machine. The construction plan could eventually represent a sea wall or the boundaries of an artificial harbor. The basic ingredients we need to make the system are easy to state:

- The robot needs to know where it is

- It needs to be able to position itself pretty well relative to stuff (blocks, the pallet of blocks)

- It needs to be good at picking up and dropping blocks at the right places

- It needs a place to pick blocks up from and a place to put them down on

Our prototype system, called Droplet, implements these core capabilities in the most basic way that we could think of. Even so, building it required just over a year of effort. We went through two major iterations of the system. The first could stack up to three blocks successfully, or about four repetitions of picking and placing a block in the same place. The second was able to pick and place a block successfully 100 times with no error.

The rest of this article will talk about how all of the major components of Droplet were designed, including how the first failed iteration's shortcomings informed the much more successful second iteration. Much of this might be obvious to a seasoned systems roboticist but I sure would have loved to read something like it a year ago!

A pretty good first try

Our first iteration of Droplet was based on a stock BlueROV2 Heavy with no modifications to its internal electronics. Its manipulator was a Newton gripper with 3D printed finger modifications zip tied on and facing forward. The blocks were fitted with ARTags from ARTrack Alvar which were used to provide relative-position information to the robot. Other ARTrack Alvar tags, scattered behind the PVC tables, were used to provide global location information through a basic SLAM algorithm. The controller was a cascaded PID controller where the higher level PID controller issued RCOverride commands, simulating a pilot with a joystick. Here it is in action:

We'll go into detail about all of the shortcomings of this system, but many of them are visible right off the bat:

- the robot overshoots every move it makes

- it cannot hold its pitch steady

- it fails to pick the blocks up properly because of the poorly made gripper

- the error correcting features on the blocks can't handle the amount of pre-drop position error

Hardware Platform (inside the main tube)

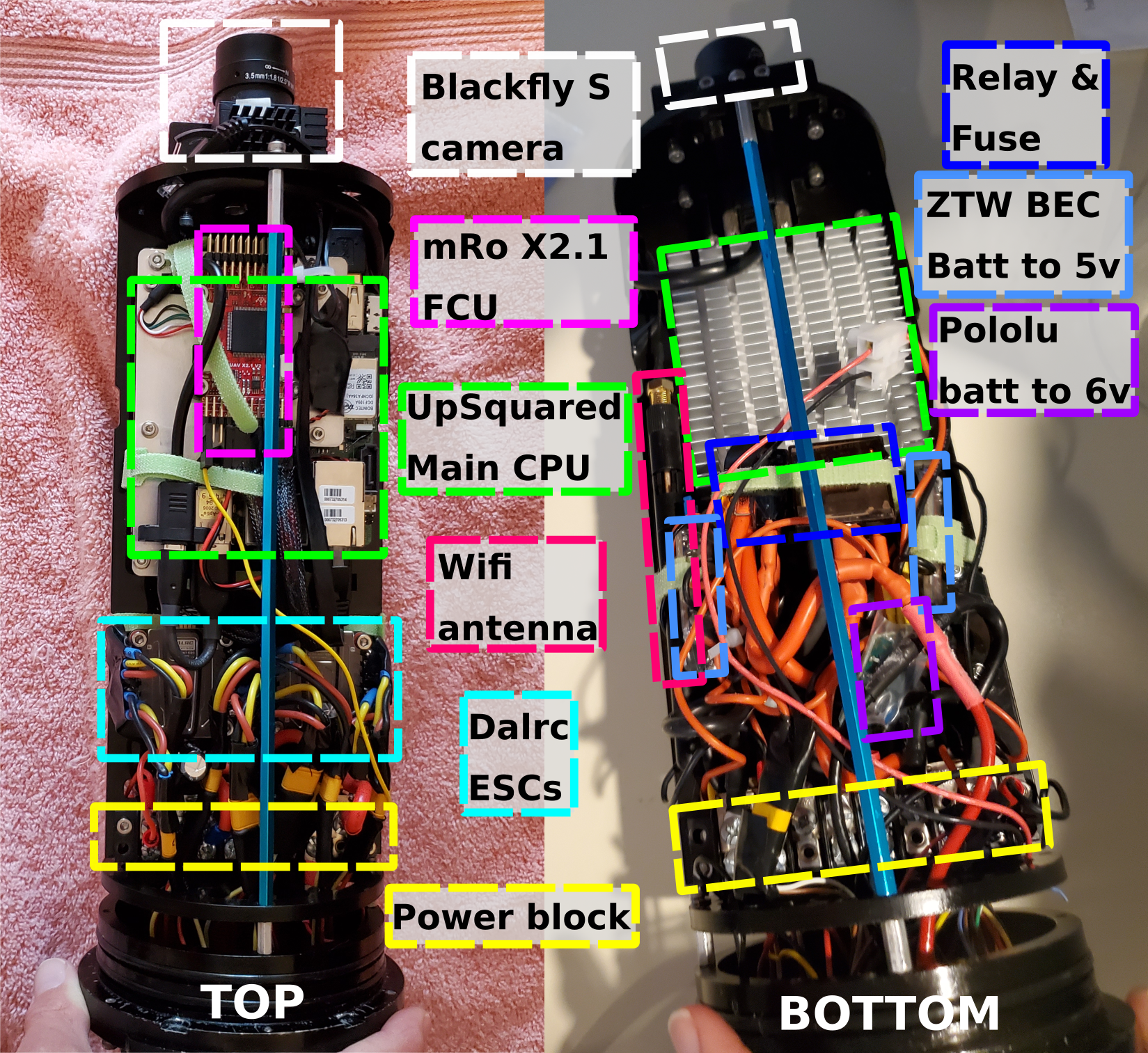

To achieve a reliable, autonomous system we ended up replacing every single part of the main electronics tube (except the power terminal block). CAD files to replicate our mounting hardware for all the new electronics are here. We kept the basic premise of the flow of connections between components the same as the stock BlueROV but used higher quality components designed more for autonomous robots. Attempting to use the stock hardware and software for the BlueROV quickly exposed how different it is to design a good platform for an autonomous robot vs designing something to be teleoperated. In short: people are way smarter and more adaptable than software so little things like mediocre image quality, poor latency and lack of easy configurability can be smoothed over with our big old brains. I'll dive into each component of the system below and describe how we started, what the problems were and how we fixed them.

Camera

We started out with the stock BlueROV2 camera that comes mounted on a little servo for tilting. The camera streams images through a GStreamer pipeline defined here. The pipeline starts out using v4l to pull frames off of the camera, puts them in a queue, then chunks them up into packets to send out over the tether. Somewhere in the pipeline, it appends a timestamp to the image frame that doesn't necessarily exactly correspond to when the frame was captured. We built a ROS node that uses another GStreamer pipeline to read frames off of the tether.

The LowHD (stock BlueROV) camera is an h264 webcam first and foremost so it uses hardware acceleration to compress frames as they are captured to make sending its stream over the wire efficient. This is great for people because we handle slight noise in images well. The story is not the same for localizing based on visual fiducial markers: h264 compression causes compression artifacts. One particular artifact, called ringing, causes fuzz around sharp changes in colors in an image. Ringing plays havoc with visual fiducials which usually depend on the stable localization of four corners of a black square on a white background. Small perturbations in the software's idea of where the corners of the marker are lead to big noise in the robot's position relative to the marker. Increased position noise can lead the robot to make mistakes when dropping blocks!

In addition, we found that there seems to be an inherent 0.1-second latency when pulling images off of the camera. This persisted even when we captured frames without the GStreamer pipeline. Our guess is that there is some sort of queueing of frames going on in the camera's hardware before or after the compression takes place. Or perhaps it simply takes the camera 0.1 seconds to compress a 1920x1080 image. The problem persisted even when using v4l's configuration tool to reduce the image size or to read the frames in a raw image format. For a person, a 0.1-second latency is nothing, especially if they aren't trying to do anything detailed. For software, it is hard to design an effective controller when the reaction to a change in your control input is only apparent 0.1 seconds after you take the action.

The tilting servo also caused more problems than it solved for us. Properly localizing relative to visual fiducial markers requires a very accurate idea of the camera's extrinsics relative to the robot's center of mass (or the point on the robot you control based on). Particularly important is understanding how the camera is angled relative to the chassis, since the localization software has to transform location readings coming from the camera frame into whatever frame you control based on. Small errors in the camera's tilt can lead to very wrong ideas of the robot's relative position to a marker when the robot is a meter away. Think of a right triangle with a one-degree angle between the hypotenuse and the base. As we increase the length of the hypotenuse, the length of the shorter edge grows in proportion.
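To put numbers on the right-triangle picture, here is a minimal sketch that treats the distance to the marker as the hypotenuse and the tilt error as the small angle. The function name and the ranges in the loop are illustrative choices, not values from our system. At one meter, a one-degree tilt error already shifts the apparent marker position by roughly 1.7 cm.

```python
import math


def lateral_error(range_m: float, tilt_error_deg: float) -> float:
    """Length of the short edge of a right triangle whose hypotenuse is
    range_m and whose small angle is tilt_error_deg."""
    return range_m * math.sin(math.radians(tilt_error_deg))


if __name__ == "__main__":
    # Illustrative ranges only; the error grows linearly with distance.
    for range_m in (0.5, 1.0, 2.0):
        error_cm = 100.0 * lateral_error(range_m, 1.0)
        print(f"{range_m:.1f} m away, 1 degree tilt error: {error_cm:.2f} cm")
```

The same error is doubled at two meters, which is why a camera mount that can drift in tilt hurts most when the robot is approaching from a distance.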

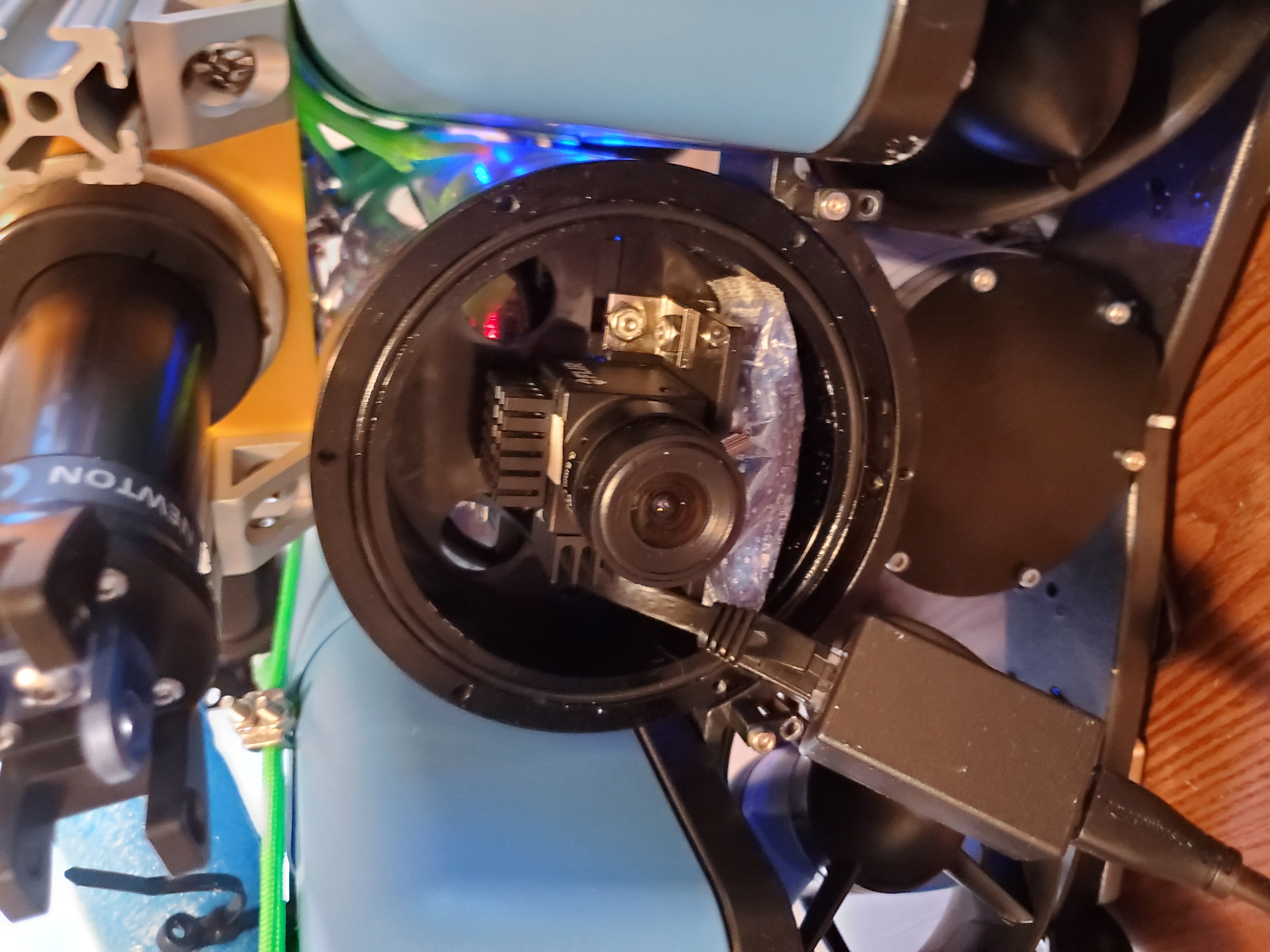

We solved these problems by switching from the stock BlueROV camera to a camera designed more for computer vision: a FLIR Blackfly S. The camera is held in a fixed position. The Blackfly S camera is accessed using an excellent API which has a pre-built ROS driver that only needed small tweaks to get running. Our fork of the spinnaker ROS driver is here. The Blackfly S allows Droplet to read raw monochrome image frames with low latency directly on its main computer which greatly improves localization performance (and thus the general quality of the system as a whole).

Main Computer

After changing the camera, the main bottleneck in latency became the speed at which video frames could be processed by the main computer. The Raspberry Pi 3B that comes with the BlueROV also lacks USB3 ports which makes reading frames off the camera just a little bit slower than it needs to be. Our first impulse was to use a Raspberry Pi 4 which offers more RAM, USB3 ports, and a faster processor. At the time, however, there wasn't enough support for Ubuntu 18.04 to make developing on the Raspberry Pi 4 worthwhile.

We ended up settling on an UpSquared for the main computer because it was just small enough to fit in the 4" enclosure tube while also packing a lot of punch for its size. Being x86 is an added bonus since there tends to be more library support from ROS for x86 computers.

Removing the tether

The BlueROV2 is a high power machine so the minor dynamic effects that the tether has on the robot are easy to adapt to for a human pilot. A human operator also has some intuition of how drag and currents work so they can learn quickly to change if something isn't working out. For a PID control loop, changing dynamics wreak havoc on the robot's ability to position itself. In addition, the tether makes it more complicated to transport and deploy the robot.

Instead of using a wired tether, we opted for having the robot host a wifi network to which the operator can connect when the robot is surfaced for loading and updating code and starting the software. This choice means that the robot has to be surfaced to interact with its computer in a meaningful way. We chose to have the robot host its own network so that it can be accessed in wifi-deprived situations, like, say, on a dive boat.

A simple UI for operation by swimmers

During one tank test of the robot after removing the tether, a software bug caused the robot to turn all eight of its motors on full speed and leave them there. We had no way to reconnect to the robot when it was submerged so someone had to jump in the tank and wrestle the robot to the surface while it bucked and flipped around, spraying water all over the lab. Having no way to interact with the robot while submerged was a nonstarter.

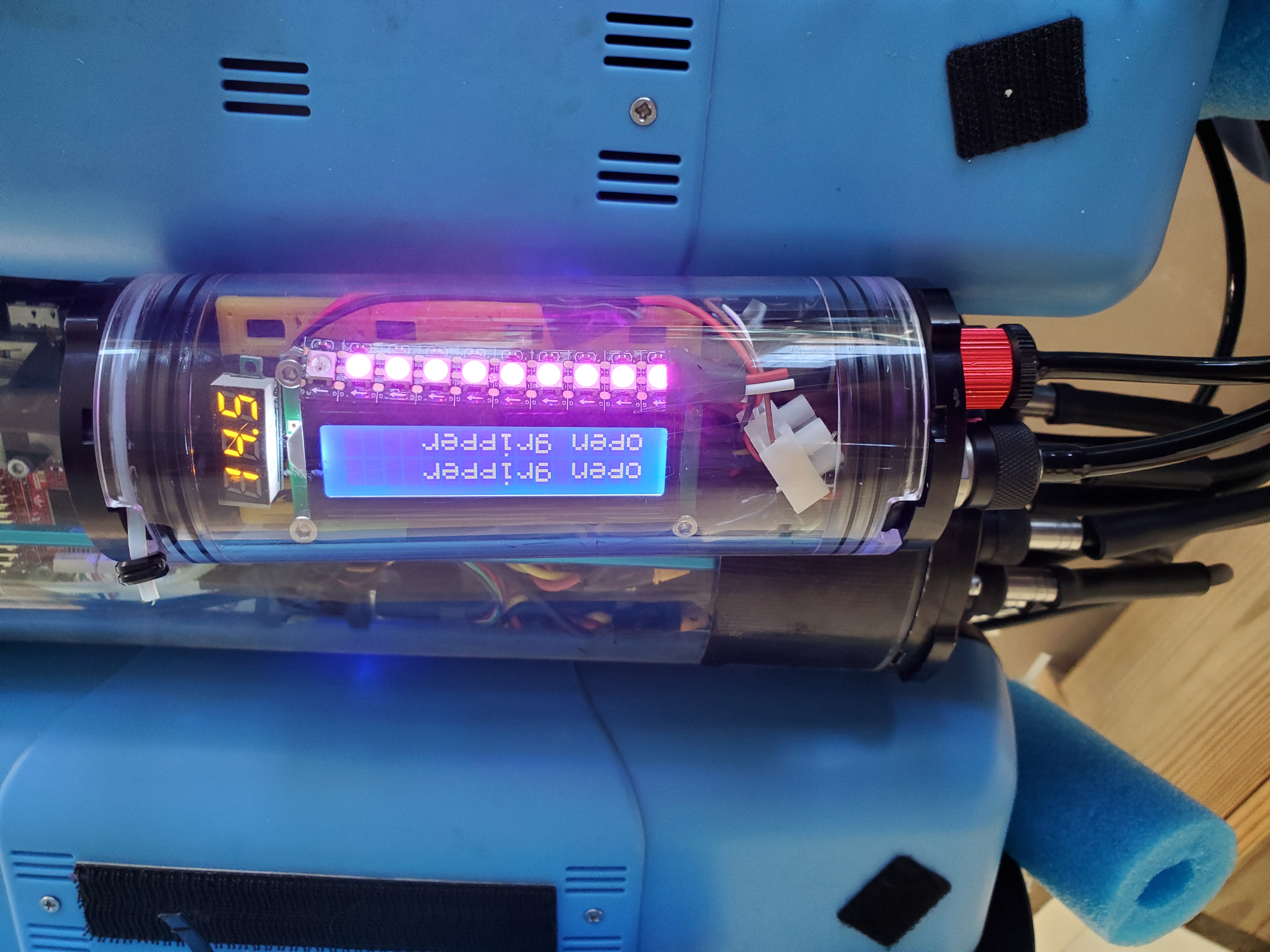

We developed a simple user interface that most importantly features a kill switch wired to a relay that can completely power the robot down using one of the Blue Robotics switches. The UI also features an LCD screen and LED strip driven by an Arduino Nano. The UI is connected to the main computer through a USB serial connection. We found the four pin connectors from Bluetrail Engineering to be awesome for making this bridge. No potting! A big thank you to Zachary Zitzewitz for doing the wiring of the electronics / initial programming for this UI!

ESCs

The BlueROV2's brushless thrusters are driven by little PCBs called Basic ESCs that convert a PWM signal from the Pixhawk's thruster pins into the details of activating the magnets in sequence to drive / accelerate the thruster's propellers. For each thruster, there is a distinct ESC which leads the ESCs to take a large amount of the space in the enclosure. To save space, we moved to two 4-in-1 ESCs called DalRC Rocket 50A. The 50 amp current limit on these ESCs means that the robot could harm itself if, for some reason, four motors are on at full tilt. Droplet does most of its work only gently using the thrusters so this limit is not prohibitive. To keep the robot from melting itself in an emergency, we added a 60 amp maxi fuse into the power line.

FCU (flight control unit)

Because the UpSquared takes up more space than a Raspberry Pi, we had to go with a smaller FCU for bridging the gap between the main computer and the thrusters / servos. We chose an mRobotics X2.1 FCU which supports the ArduPilot stack out of the box and also claims to have improved IMU sensors relative to the standard Pixhawk that ships with the BlueROV.

Control System

Our initial attempt at a control architecture was to try to keep as much of the original BlueROV2's ArduPilot-based control system intact as possible. We would control the robot using a cascaded PID controller where the higher level PID controller utilized RC override messages through mavros. We tried controlling the robot using this style in several of the modes but got the best results in the GUIDED mode. Using GUIDED mode without an external localization source required editing the ArduSub firmware.

We achieved limited success using this control strategy. A primary challenge was debugging whether weird behaviors were coming from the higher level PID controller or one of the ArduPilot ones. This problem was exacerbated by how complex ArduPilot's controller code is to read and the limited ability to get debugging info off of the FCU. We ended up adding "print" statements (GCS.send_text() calls) to the FCU firmware to try to debug the problems.

An additional layer of complexity in the controller also comes in when you consider the details of how a "PWM" signal emitted from the Pixhawk is interpreted by the microchips that translate the signal into a thruster speed. The chips are called "ESCs" and are driven by a closed source driver called BLHeli. The driver exposes a ton of configuration parameters whose meaning is difficult to interpret. Basically, the ESC driver interprets the PWM signal as a "throttle position" and the driver manages all of the nitty-gritty details of accelerating the brushless DC thrusters. All of this means that given a PWM signal coming out of the autopilot it isn't clear what exactly the thrusters are going to do. Another confounding source of complexity is that each motor has a different response to PWM values sent to the ESCs. Each motor starts spinning at a different PWM value and the value is different in the forward and reverse directions.
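To make the per-motor differences concrete, here is a minimal sketch of one way a controller could compensate for them: store the PWM value at which each motor starts spinning in each direction and map commands onto the range beyond that point. This is an illustration, not necessarily what Droplet does. The neutral and limit values, the `MotorCalibration` type, and the calibration numbers in the usage note are all assumptions.

```python
from dataclasses import dataclass

# Assumed pulse widths in microseconds; real values depend on the ESC setup.
PWM_NEUTRAL = 1500
PWM_MIN, PWM_MAX = 1100, 1900


@dataclass
class MotorCalibration:
    """Hypothetical per-motor calibration, measured once per thruster."""
    forward_start: int  # lowest PWM at which the motor spins forward
    reverse_start: int  # highest PWM at which the motor spins in reverse


def command_to_pwm(command: float, cal: MotorCalibration) -> int:
    """Map a command in [-1, 1] to a PWM value that skips the deadband."""
    command = max(-1.0, min(1.0, command))
    if command == 0.0:
        return PWM_NEUTRAL
    if command > 0.0:
        # Scale onto [forward_start, PWM_MAX].
        return round(cal.forward_start + command * (PWM_MAX - cal.forward_start))
    # Scale onto [PWM_MIN, reverse_start]; command is negative here.
    return round(cal.reverse_start + command * (cal.reverse_start - PWM_MIN))
```

With a made-up calibration such as `MotorCalibration(forward_start=1530, reverse_start=1465)`, a tiny positive command lands just above 1530 instead of inside the range where that motor would not move at all.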

A simplified control system

Autonomous behaviors like ours need much finer control than an ROV usually requires, so we had to reimagine the control system. To that end, we created a drastically simplified control system. We replaced the Blue Robotics Basic ESCs with more capable 4-in-1 ESCs that allow configuration via a GUI which made it relatively simple to change the ESC configuration to be more suited to what we need. We also developed a hugely simplified firmware for the FCU. The firmware is so simple that it effectively demotes the FCU to an IO board running the mavlink messaging protocol. Rather than using the joystick-based mapping between RC override channels and the motors, we made each channel reference a distinct motor. Other than performing some basic safety checks, the firmware simply forwards the speeds given in an RC override message to the ESCs. The firmware we developed is called SimpleSub. It's on GitHub here.
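The one-channel-per-motor idea can be sketched on the main computer side as follows. This is a minimal illustration rather than the SimpleSub code: the function name, the normalized speed range of [-1, 1], and the 1100/1500/1900 microsecond pulse widths are assumptions. The resulting list is the kind of value that would go into the `channels` field of a mavros `OverrideRCIn` message.

```python
NUM_MOTORS = 8

# Assumed pulse widths in microseconds.
PWM_NEUTRAL = 1500
PWM_MIN, PWM_MAX = 1100, 1900


def speeds_to_channels(speeds):
    """Convert eight motor speeds in [-1, 1] into eight PWM channel values.

    Channel i drives motor i directly; there is no joystick-style mixing.
    """
    if len(speeds) != NUM_MOTORS:
        raise ValueError(f"expected {NUM_MOTORS} speeds, got {len(speeds)}")
    channels = []
    for speed in speeds:
        # Basic safety check: never command outside the allowed range.
        speed = max(-1.0, min(1.0, speed))
        channels.append(round(PWM_NEUTRAL + speed * (PWM_MAX - PWM_NEUTRAL)))
    return channels
```

Keeping this mapping trivial means the thrust allocation (which motors to drive for a given motion) lives entirely in the ROS nodes, where it is easy to log and change.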

The main mission command and control software runs on the AUV's main computer in ROS nodes. A major benefit of having little complexity in the FCU is that everything that goes into making a decision for the robot is recorded in the form of ROS messages and can be replayed as a bag file. The PID controller on the main computer that directly controls the thrusters adopts its structure from ArduSub's PID controllers.
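For readers who have not written one, here is a minimal sketch of a single-axis PID loop with a clamped integrator. It is a generic textbook form, not ArduSub's implementation and not the exact controller that runs on Droplet. The class name, the `i_max` parameter, and the gains in the usage note are assumptions.

```python
class PID:
    """Textbook PID controller with a clamp on the integrator state."""

    def __init__(self, kp: float, ki: float, kd: float, i_max: float):
        self.kp = kp
        self.ki = ki
        self.kd = kd
        self.i_max = i_max  # limit on the accumulated error, not on the output
        self.integral = 0.0
        self.prev_error = None

    def reset(self) -> None:
        """Forget accumulated state, e.g. when the setpoint changes."""
        self.integral = 0.0
        self.prev_error = None

    def update(self, error: float, dt: float) -> float:
        """Return the control output for the current error and time step."""
        if dt <= 0.0:
            raise ValueError("dt must be positive")
        # Accumulate error, then clamp it to limit integrator windup.
        self.integral += error * dt
        self.integral = max(-self.i_max, min(self.i_max, self.integral))
        # No derivative term on the first call, since there is no history yet.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative
```

One instance per controlled axis, for example `PID(kp=1.0, ki=0.1, kd=0.05, i_max=0.5)` with placeholder gains, fed with position error from the localization node, is enough to reproduce the kind of gain-tuning loop described later in this article.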

I developed this new control architecture during the beginning of the Covid-19 pandemic when things were most disorganized. As a result, I had no access to a pool. Luckily, one of my advisors offered a carport where I could use the 6-foot tank shown in the video below. Because it is only just deep enough for the robot to float, I was limited to testing the robot in only three dimensions (x, y, yaw). This test setup proved to be sufficient -- it allowed me to develop a controller that worked well using only the bottom four thrusters of the robot and then generalize to the top four for the z, roll, and pitch axes of the robot's position. The video below shows an example of the type of control tests I used to evaluate the controller.

The Manipulator

This manipulator was the first serious CAD design project I've done, so the quality of the implementation tracked my ability to design CAD models / get things fabricated.

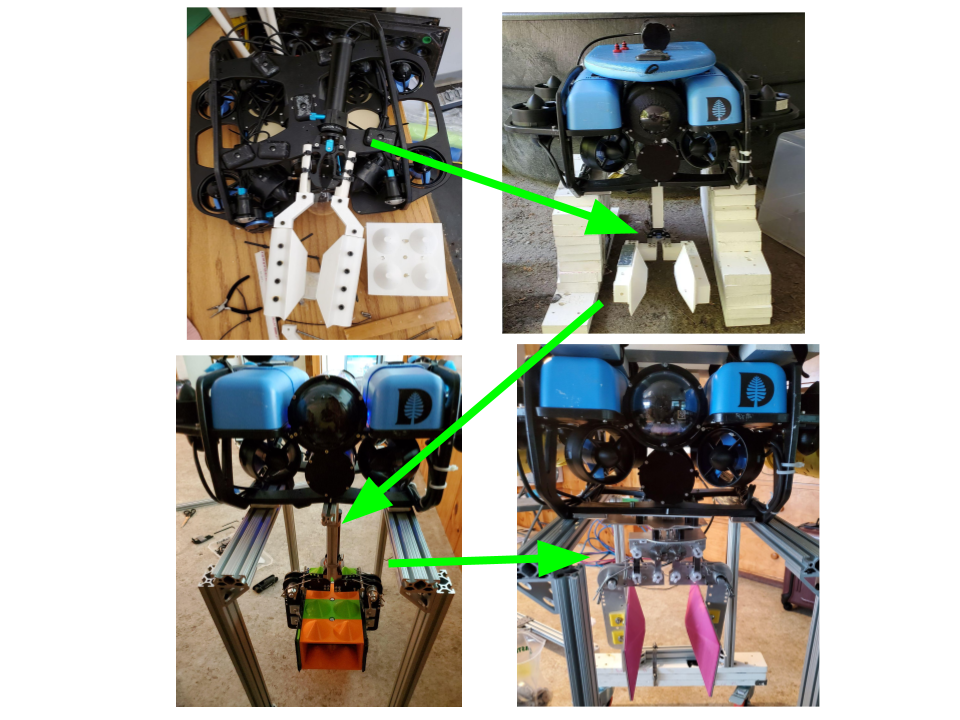

The initial iterations (top half of the figure) were based on a barely modified Newton gripper. The very first iterations simply used zip ties to attach printed fingers to the Newton gripper's provided fingers. This was easy to implement but getting the finger attachments to stay on reliably was a major challenge. Even slight loosening in the zip ties made the fingers drift around which ruins any estimated extrinsics. In addition, the fingers were mounted using the default Newton gripper mounting configuration. This made the extrinsics even harder to deal with.

In the following iteration, we had some custom fingers milled from aluminum for the Newton gripper which featured four #6 mounting holes allowing 3D printed finger attachments to be robustly attached. Mounting the gripper was still a challenge. We cycled through several ways to attach the gripper to the bottom of the robot but it was difficult to make the extrinsics easy to work with (finger grasp center near the robot and low down). In the end, we determined that the side grip style was simply not the way to go if we wanted an easy to work with grasp configuration.

This insight led to the first top-down grasp style manipulator / block combo (bottom left in the figure). The top-down grasp configuration had the benefit that it was possible to mount the Newton gripper directly below the robot's center, which made the extrinsics extremely easy to work with. The top-down configuration led to much larger laser-cut Delrin fingers which were heavy enough that they put strain on the Newton gripper in ways it wasn't designed to handle.

The Newton gripper doesn't seem able to handle large weights consistently pulling the fingers down while it is trying to open. We had several failures of the internal ESC that drives the Newton gripper's brushed DC motor. To combat this, we tried two different gear ratios: 70:1 and 120:1. The 120:1 motor broke the pin which couples the threaded drive shaft and the motor shaft. The 70:1 motor proved to be much more reliable but added strain to the fingers, making it impossible to rely on the built-in current-consumption-based stopping mechanism.

These issues led us to design a fully laser-cut 6061 aluminum manipulator which is actuated using servos designed to be used in a high-control and high-reliability situation. We adopted the Bluetrail Engineering servos and designed our 2DOF manipulator using a simple 2:1 gearbox for the wrist rotation and a 3:1 gear ratio for actuating the fingers using a parallel linkage. The video below shows the best iteration of the gripper in action!

That was the manipulator that was finally used for the paper. There are several issues I'd like to correct with its design for future iterations. The wrist mechanism is a simple friction fit shaft coupled with a flange mounted shaft collar. When bumped vigorously, the wrist can slide without turning the servo, which made it annoying to continually reset it between runs.

Deployment workflow

One thing there is definitely no room for in a 6-page conference paper is the details of how you would actually manage the test-modify-test loop inherent to running a robot in the real world. With underwater robots, this process is particularly hard to get down. Unless you have one of the new light-based communication devices, you can't just shell into the robot while it is running and watch print statements. Instead, you need to be more deliberate about how you deploy things. Because the robot is only available over a wifi link when its main tube is in the air, it is inaccessible during a run, meaning you are left with only the LEDs and LCD as feedback on what it is doing at any time.

The basic test-modify-test loop is pretty simple: give the robot some code, run it, pay attention to what it is doing, stop it if it seems stuck, surface it, tweak the code, run it again. The multitude of interacting factors that led to a specific behavior make it challenging to determine by eye what is going on so a lot of the iterations tend to be something like "increase the I gain by 0.05", "now try 0.075" and so on.

It turned out that effectively supporting this test-modify-test loop was essential to rapidly making progress with the robot so I spent a lot of time figuring out the right infrastructure to make it happen.

Updating software

Because the test-modify-test loop primarily takes place in a pool, I needed to be as efficient as possible about how I used the time. Initially, I directly edited and ran code on the robot, then copied it back to my laptop to upload to GitHub and work on in the evening. This quickly led to the predictable de-sync problems where I'd launch the robot running code I didn't think it was running, which makes debugging a nightmare.

I explored a Docker-based approach where I'd build an image on my laptop, send it over the wire to the robot and launch it. I had trouble getting it to properly support the USB camera with low latency, and the images were huge, which made uploading the code over the robot's 2 Mbps wifi connection a nightmare (and worse really than just editing the code straight on the robot).

Walter Andrews ended up giving me a dead-simple way out of this bind: compile the whole catkin workspace on my laptop, which is much more powerful than the robot, then tar the whole install directory in the catkin workspace, send that over the wire to the robot, and unpack it into the robot's catkin workspace. Since my laptop runs the same x86 version of Ubuntu (18.04) as the robot, as long as they are in sync with installed dependencies, installation of modified code takes less than ten seconds and there is no ambiguity about which version the robot is running!
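The tar-and-ship steps can be sketched in a few lines. The real tool is a bash script, so treat this Python version as an illustration under stated assumptions: the workspace has already been built with an install step, `scp` and `ssh` are available with key-based login, and the host name and remote paths you pass in are your own. All function names here are hypothetical.

```python
import subprocess
import tarfile
from pathlib import Path


def pack_install(workspace: Path, archive: Path) -> Path:
    """Tar and gzip the install directory of an already-built workspace."""
    install_dir = workspace / "install"
    if not install_dir.is_dir():
        raise FileNotFoundError(f"no install directory in {workspace}")
    with tarfile.open(archive, "w:gz") as tar:
        # Store paths relative to the workspace so they unpack as install/...
        tar.add(install_dir, arcname="install")
    return archive


def deploy_commands(archive: Path, robot: str, remote_ws: str):
    """Build (but do not run) the copy and unpack commands."""
    remote_archive = f"/tmp/{archive.name}"
    return [
        ["scp", str(archive), f"{robot}:{remote_archive}"],
        ["ssh", robot, f"tar -xzf {remote_archive} -C {remote_ws}"],
    ]


def deploy(workspace: Path, robot: str, remote_ws: str) -> None:
    """Pack the local install directory and unpack it on the robot."""
    archive = pack_install(workspace, workspace / "install.tar.gz")
    for command in deploy_commands(archive, robot, remote_ws):
        subprocess.run(command, check=True)
```

Splitting command construction from execution makes it easy to print the commands for a dry run before trusting them poolside.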

I wrapped this process into a bash script that also switches the computer over to the robot's wifi network and handles all of the steps to deploy a new catkin workspace, making deployment as simple as running a single bash command. The script isn't as general as it might be (it has hard-coded paths, but I'm a researcher so who cares right?) but you can see it here.

The physical deployment process

The testing for Droplet occurred in pools that were used for other purposes most of the time. This meant that we needed all of the infrastructure to be mobile and as easy to set up and put back into storage as possible. Luckily, I had access to storage near the pool where Droplet was primarily deployed and tested.

I added hinges into the build platforms so that they could easily be folded and put into storage and fit through standard-size doors. I added custom ergonomic handles to the bolts that are used to level the build platforms to make them as fast as possible to adjust so I could get to doing other work.

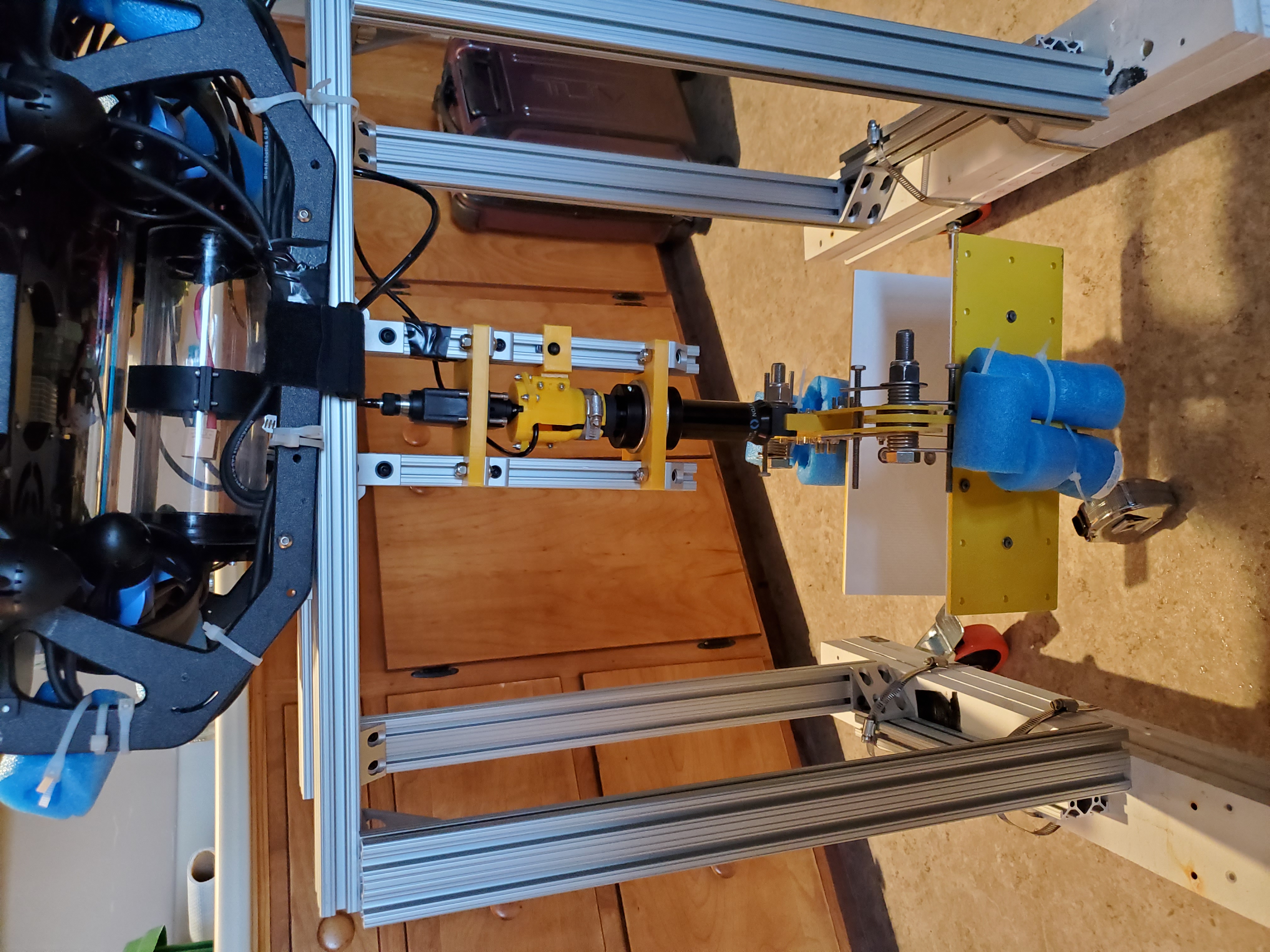

To make the between-run time as simple as possible, I added a hook tied with loose ropes on the top of the robot that fits onto the hoist you see at the bottom of this section's figure. The hook is set so that the robot's main tube is held just out of the water and the whole thing is mounted on wheels for easy transport.

For storage, the robot lives on a wheeled cart that is designed with space for the manipulator to hang below. Lifting the robot onto this thing and into / out of the water is the riskiest part of the deployment process. It is still better than the alternative: removing and re-attaching the manipulator between each run. Little changes in the manipulator's mounting position compound positioning problems and need to be avoided at all costs!

Updating robot libraries

Because Droplet uses its wifi card to host a wifi network for access, connecting it to the internet to install dependencies requires cracking the waterproof enclosure open to connect physically to an ethernet cable. I made this process as painless as possible by running a thin ethernet cable up to a spot near the camera, allowing the computer to connect to the internet while only removing the dome.