As part of ongoing research in autonomous underwater robotics, we at the Dartmouth Reality and Robotics Lab and the Autonomous Field Robotics Lab are developing a low-cost AUV platform, based on the electronics tube from the Droplet AUV, to meet the extremely niche set of needs of research in underwater autonomous field robotics. Working in underwater robotics feels like being split between the 1980s and the 2020s. The computers, cameras and algorithms available for the internals of an AUV are accessible and (relatively) easy to work with, but the hardware, like penetrators and thrusters, and the deployment workflows feel more like the early days of robotics. You can't just buy an AUV for a reasonable amount of money and expect a nice programmatic interface for moving it. You can't expect the details of code deployment or operation of the robot to have been thought out at all. My goal in writing this blog post is to start breaking down this barrier to wider AUV research and deployment, with AUVs you don't need to be a government agency to afford. Such an AUV could even enable autonomous applications in industry, where things are (to my knowledge) still mostly done with ROVs.

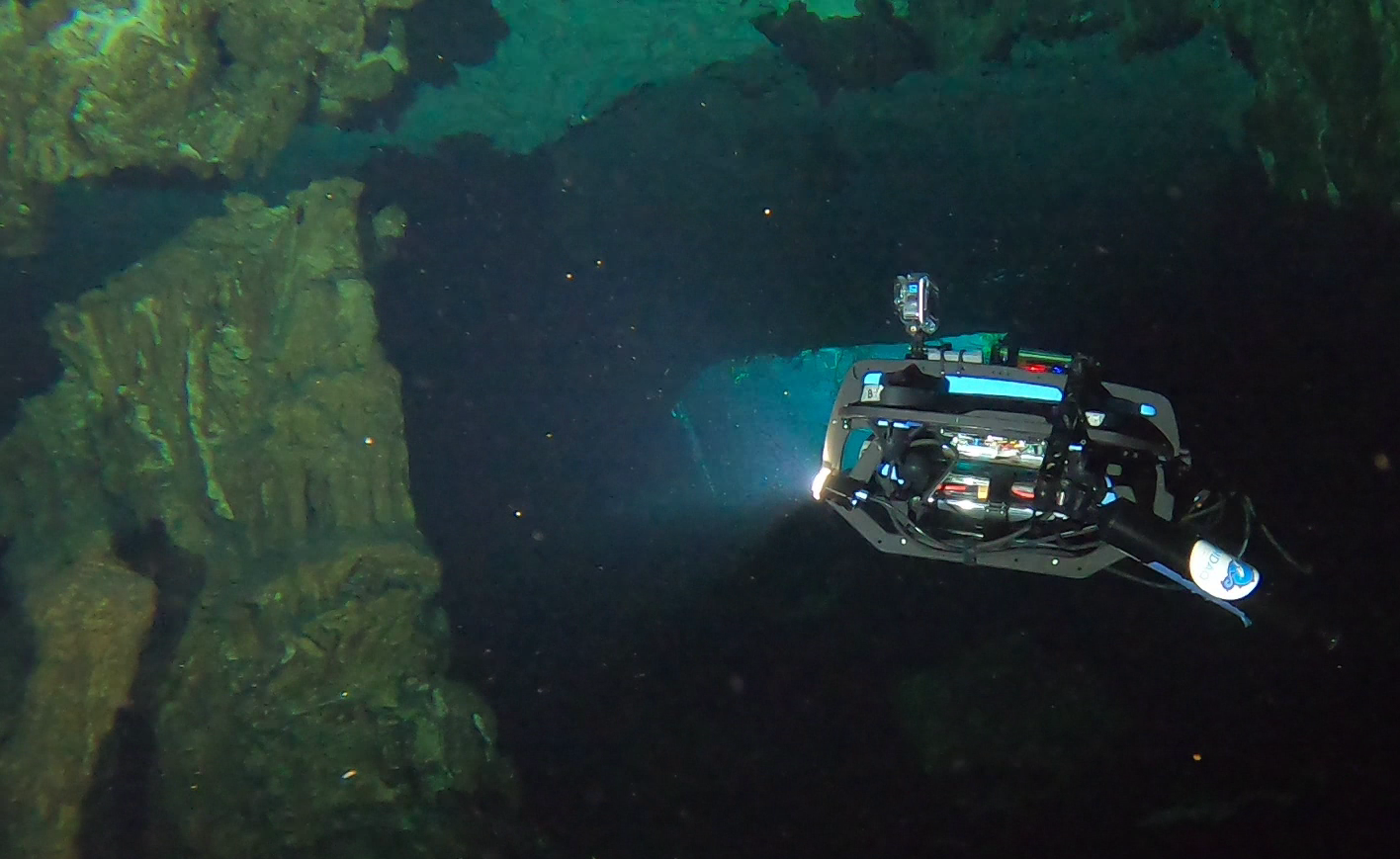

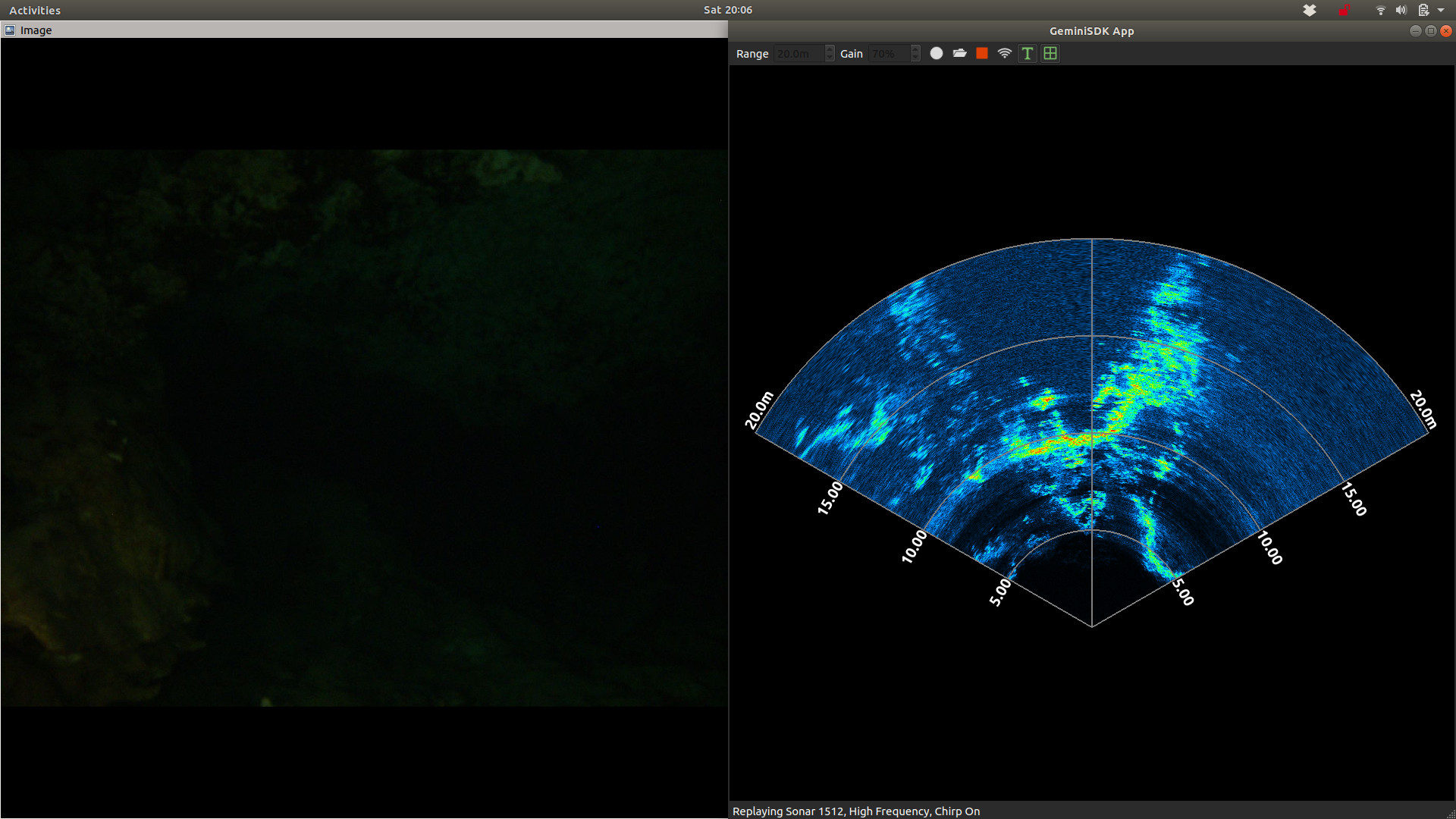

We took the latest iteration of the Droplet AUV to some beautiful cenotes to collect data for a few of our research projects as a part of a science week organized by Centro Investigador del Sistema Acuifero AC (CINDAQ) and the Mexico Cave Exploration Project (MCEP) in Puerto Aventuras. The field excursion was a trial by fire of sorts for this iteration of the AUV: it showcased some important capabilities of the unit and also some shortcomings. First, I'll describe the workflow we are supporting, then I'll give a detailed breakdown of what went wrong and what worked, and suggest next steps for the platform.

System requirements

To support our low cost AUV research, we need an AUV that:

- Can be packed into a suitcase and taken on as regular checked baggage in a normal flight

- Can be carried by one or two people over rugged terrain

- Can be operated and debugged in situations where there is no access to internet or electricity

- Can operate without a tether or any connection to a surface computer

- Is safe enough to be carried to deployment locations underwater by divers

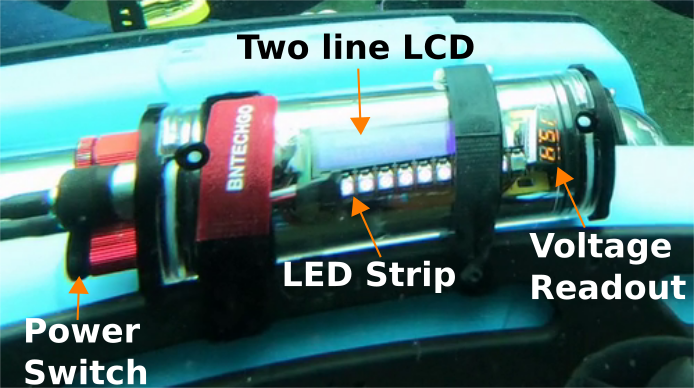

- Provides some feedback to divers about its internal state (why isn't this thing doing anything?)

- Provides some basic human-robot interaction capabilities so divers can tell it what to do

- Has a decent-quality, configurable machine vision camera

- Has computers that are fast enough to run the algorithms we are working on in real time

- Gives the operator good enough feedback to make on-the-fly decisions about the experiments they are running, since the person diving down with the robot may not be the one who wrote the code

To our knowledge, there isn't anything out there that meets this set of needs, which means that platform issues constantly hold back this field of research. Next up, I'll describe where we currently stand in meeting them.

Our implementation

The strategy that seems to be working well for us is sticking with the BlueROV2 Heavy chassis (which is light and strong enough) and focusing on the electronics internals and code libraries.

The Hardware

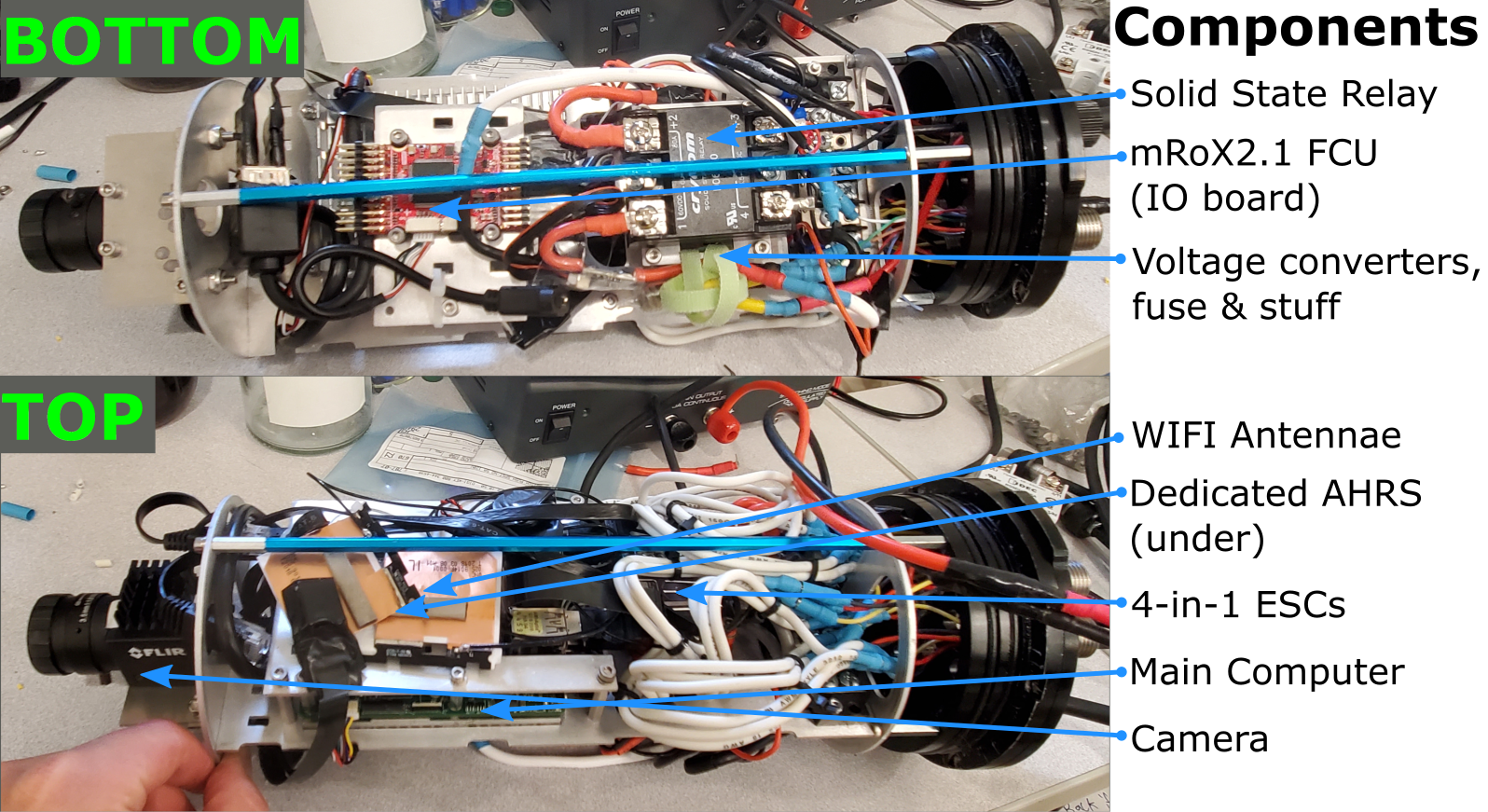

The hardware is similar in spirit to the one I talked about in my previous post, with a few upgrades:

- An industrial-quality AHRS (an Inertial Labs MiniAHRS) for improved stability

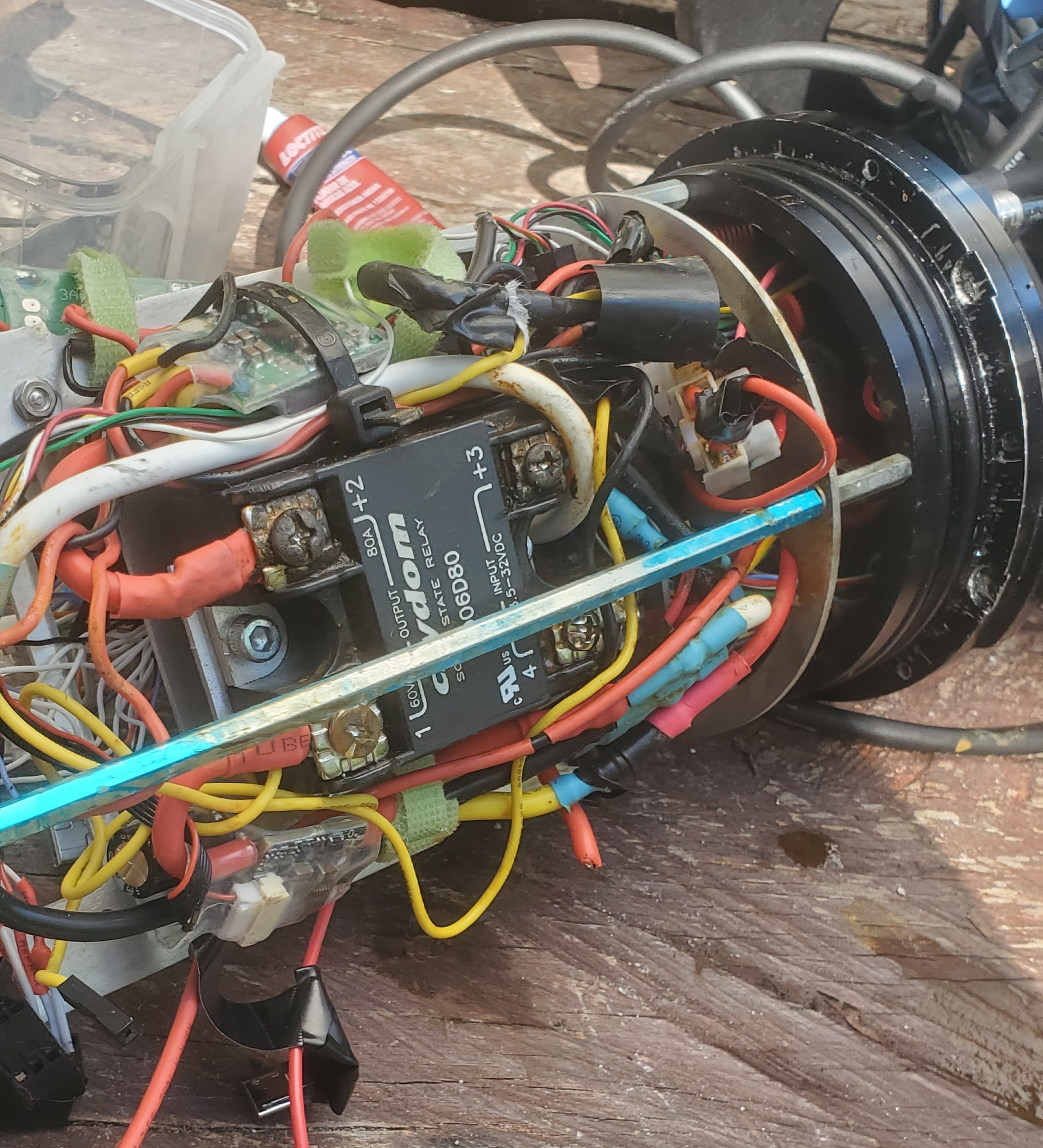

- A solid-state relay instead of an electromagnetic one, to limit interference with the compass

- Aluminum mounting plates to save space

- Hot-swappable tethering using a Bluetrail Engineering 8-pin connector for optional teleoperation.

The code

The code is spread out over the FCU, an mRobotics X2.1, and the main computer, an upBoard upSquared. In the BlueROV2, the FCU is responsible for most of the reasoning about control algorithms and even waypoint navigation. FCU boards and firmware are designed first with aerial drones in mind, where extremely tight coupling between the controller and onboard sensors such as the IMU is essential to keep the drone from falling out of the sky.

There's a tradeoff to putting complexity into the FCU, though. To update the code, you need to write C++ and then flash the firmware. The logic in the firmware is difficult to simulate and debug using standard ROS tools. Writing, compiling and flashing a C++ codebase that runs on a tiny, resource-constrained computer is a no-go when time is short and you are being eaten alive by mosquitos next to a lake. Thus, we decided to demote the FCU to more of an IO board that streams sensor data and provides an interface for commanding the thrusters. The code that actually controls the robot runs in Python at about 40 Hz and does just fine at the speeds the robot needs to move around divers. As a bonus, it is a ROS package, so you get all the nice debugging and logging tools that come with the ROS ecosystem.
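
To make the "FCU as IO board" split concrete, here is a minimal sketch of a fixed-rate read, compute, write loop in plain Python. It is not our actual ROS package: `run_control_loop`, the three callables and the dictionary message shapes are all hypothetical names chosen for illustration, and in a real ROS node the framework's own rate or timer utility would take the place of the manual sleep.

```python
import time
from typing import Callable, Dict

Sensors = Dict[str, float]   # hypothetical shape of the data streamed by the FCU
Command = Dict[str, float]   # hypothetical shape of a thruster command


def run_control_loop(
    read_sensors: Callable[[], Sensors],
    compute_command: Callable[[Sensors], Command],
    write_thrusters: Callable[[Command], None],
    rate_hz: float = 40.0,
    duration_s: float = 1.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Run read -> compute -> write at a fixed rate and return the tick count."""
    period = 1.0 / rate_hz
    start = clock()
    next_tick = start
    ticks = 0
    while clock() - start < duration_s:
        state = read_sensors()                   # the FCU only streams sensor data
        write_thrusters(compute_command(state))  # the control logic lives on the main computer
        ticks += 1
        next_tick += period                      # schedule against absolute time to limit drift
        delay = next_tick - clock()
        if delay > 0:
            sleep(delay)
    return ticks
```

Passing `clock` and `sleep` in as parameters is just a convenience for this sketch: it lets the loop be exercised on a laptop with a fake clock, with no robot attached.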

System Capabilities

Field Debugging

Underwater Operation

The thing about developing new algorithms for autonomous robots is that they often don't work and when they don't work the robot can easily damage itself. Thus, at least for now, we always send a team of operators down with the robot who control what code is running and intervene if things go wrong. The operators are equipped with a few fiducial markers on a carabiner. When the robot sees a marker, it initiates the behavior that the marker's ID is associated with.
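
The marker-to-behavior mapping can be sketched as a simple lookup table. This is an illustration under assumptions, not our actual code: the `MarkerDispatcher` class, the marker IDs and the behavior names are hypothetical, and the rule that a marker must leave the frame before it can trigger again is a choice made for this sketch.

```python
from typing import Callable, Dict, Iterable, Optional


class MarkerDispatcher:
    """Start the behavior associated with a fiducial marker ID (hypothetical sketch)."""

    def __init__(self, behaviors: Dict[int, Callable[[], str]]):
        self._behaviors = behaviors
        self._last_id: Optional[int] = None

    def on_detections(self, marker_ids: Iterable[int]) -> Optional[str]:
        """Handle the marker IDs detected in one camera frame."""
        known = [m for m in marker_ids if m in self._behaviors]
        if not known:
            self._last_id = None       # marker left the frame: re-arm the trigger
            return None
        marker = known[0]
        if marker == self._last_id:
            return None                # same marker still in view: do not retrigger
        self._last_id = marker
        return self._behaviors[marker]()


# Hypothetical IDs and behaviors, for illustration only.
dispatcher = MarkerDispatcher({
    3: lambda: "start_logging",
    7: lambda: "swim_square",
})
```

Feeding the dispatcher the IDs detected in each frame starts a behavior at most once per showing of a marker, and IDs that are not in the table are ignored.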

We use the UI to show whether the robot is ready to do work or receive commands. We tried deploying with no display previously, and it led to lots of time where divers were waving the behavior markers in front of the robot, or even dives where the robot wasn't actually logging data when we thought it should have been. There's also something to be said for the psychological effect of having a good display, especially when taking the robot down into a dangerous environment. Without a display it feels like holding a buzz saw that could kill you at any moment, vs a tool you have some power over when it gives you some visual feedback.

Tetherless Autonomous Behaviors

This iteration of the AUV can support simple, programmatically defined autonomous behaviors. It uses a PID controller fed with pressure, absolute roll & pitch from gravity, yaw from the compass and angular rates from the gyros. Most of this comes from our MiniAHRS unit. When a behavior is started, the depth and heading (yaw) setpoints are initialized to the current readings, then lateral (XY) motions are mixed in using a hard-coded error value. Each behavior executes for a fixed amount of time. You can chain the behaviors together to make the robot move in shapes! See the video above.
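
Here is a rough sketch of that behavior logic, assuming a textbook PID form. Everything named here (`PID`, `Behavior`, `BehaviorRunner`, the gains, the sign conventions and the `(surge, sway, heave, yaw)` command tuple) is hypothetical and chosen for illustration, and roll and pitch stabilization are left out to keep it short.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians to [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


@dataclass
class PID:
    kp: float
    ki: float = 0.0
    kd: float = 0.0
    integral: float = 0.0

    def update(self, error: float, rate: float, dt: float) -> float:
        # The derivative term uses a measured rate (e.g. from a gyro)
        # instead of differencing the error.
        self.integral += error * dt
        return self.kp * error + self.ki * self.integral - self.kd * rate


@dataclass
class Behavior:
    x_error: float     # hard-coded forward/backward "error"
    y_error: float     # hard-coded left/right "error"
    duration_s: float  # the behavior stops after this much time


class BehaviorRunner:
    def __init__(self, depth_pid: PID, yaw_pid: PID, lateral_gain: float = 1.0):
        self.depth_pid, self.yaw_pid = depth_pid, yaw_pid
        self.lateral_gain = lateral_gain
        self.behavior: Optional[Behavior] = None
        self.depth_sp = self.yaw_sp = self.elapsed = 0.0

    def start(self, behavior: Behavior, depth: float, yaw: float) -> None:
        """Latch the current depth and heading as the setpoints."""
        self.behavior, self.depth_sp, self.yaw_sp = behavior, depth, yaw
        self.elapsed = 0.0
        self.depth_pid.integral = self.yaw_pid.integral = 0.0

    def step(self, depth: float, yaw: float, yaw_rate: float,
             dt: float) -> Optional[Tuple[float, float, float, float]]:
        """Return (surge, sway, heave, yaw) commands, or None once time is up."""
        if self.behavior is None or self.elapsed >= self.behavior.duration_s:
            return None
        self.elapsed += dt
        # No depth-rate measurement is assumed here, so 0.0 is passed as the rate.
        heave = self.depth_pid.update(self.depth_sp - depth, 0.0, dt)
        yaw_cmd = self.yaw_pid.update(wrap_angle(self.yaw_sp - yaw), yaw_rate, dt)
        surge = self.lateral_gain * self.behavior.x_error
        sway = self.lateral_gain * self.behavior.y_error
        return (surge, sway, heave, yaw_cmd)
```

Chaining is then a matter of calling `start` with the next `Behavior` whenever `step` returns `None`. For example, four forward legs separated by changes to the heading setpoint would trace out a rough square.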

These autonomous behaviors are mostly a placeholder, but they were useful to validate our workflow. For future iterations, we want to incorporate some approximation of lateral motion, maybe using VIO or a DVL. That'd close the loop experimentally, so we can test navigation software.

Wins, Losses & Next Steps

Win: Wi-Fi-based shell access

This workflow was essential to getting our work done without having to connect our tether. We used it to reconfigure the code without taking the robot out of the water. This is one we will stick with.

Win: Stability during autonomous control

The autonomous behaviors worked well: the robot was stable and moved gently enough for an operator to intervene. We'll keep the basis we have and add some approximation of lateral motion to close the loop.

Win: The display made everyone more comfortable around the robot

Since the robot was operated by trained cave divers who weren't roboticists, it made everything smoother to be able to tell them, "If the display is not flashing its lights, try rebooting the robot and wait a bit for the lights to flash." It made everything feel less scary. It also smoothed out the deployment process: before descending, the divers could verify everything was working.

Loss: Hardware robustness

During the last day, some part of the penetrators or O-rings gave up the ghost. A little pool of water formed in the bottom of the electronics chamber and short-circuited all four of the leads on the relay, which corroded most of the wiring on the bottom half of the robot. We need to figure out something that improves how the robot handles a slow leak, maybe rotating the electronics 90 degrees.

The stress of transport also caused some of the internal wiring to fray and become unreliable. This meant sensors weren't working properly during one of our deployments. Attention to quality wiring and locking connectors inside the tube could eliminate this failure condition.

Loss: The AR-Marker style of diver input

The AR-Markers are easy to implement and work nicely with the robot's sensor suite and capabilities. No additional hardware is needed to support them! Though they are technically a natural choice, they are finicky. The diver has to position the marker just in front of the robot: not too close and not too far away. The diver also has to ensure the robot doesn't accidentally see the wrong tag. In open water this isn't really a problem, though it still leads to a lot of precious time spent fumbling around. In a cave, it is a no-go. There just isn't room to position yourself safely to show the robot markers.

Next steps

- In the main electronics tube, eliminate unnecessary connectors between components. Where absolutely needed, use something with a locking mechanism.

- Explore heat management options for the main tube. Maybe an aluminum tube? Perhaps using higher temperature O-rings? In the worst case, even cutting the computation power of the main computer.

- Look into better interaction methods for the divers to control the robot than the fiducial marker carabiner method we used this time.

- Find some simple but good enough way to approximate lateral velocity or position.